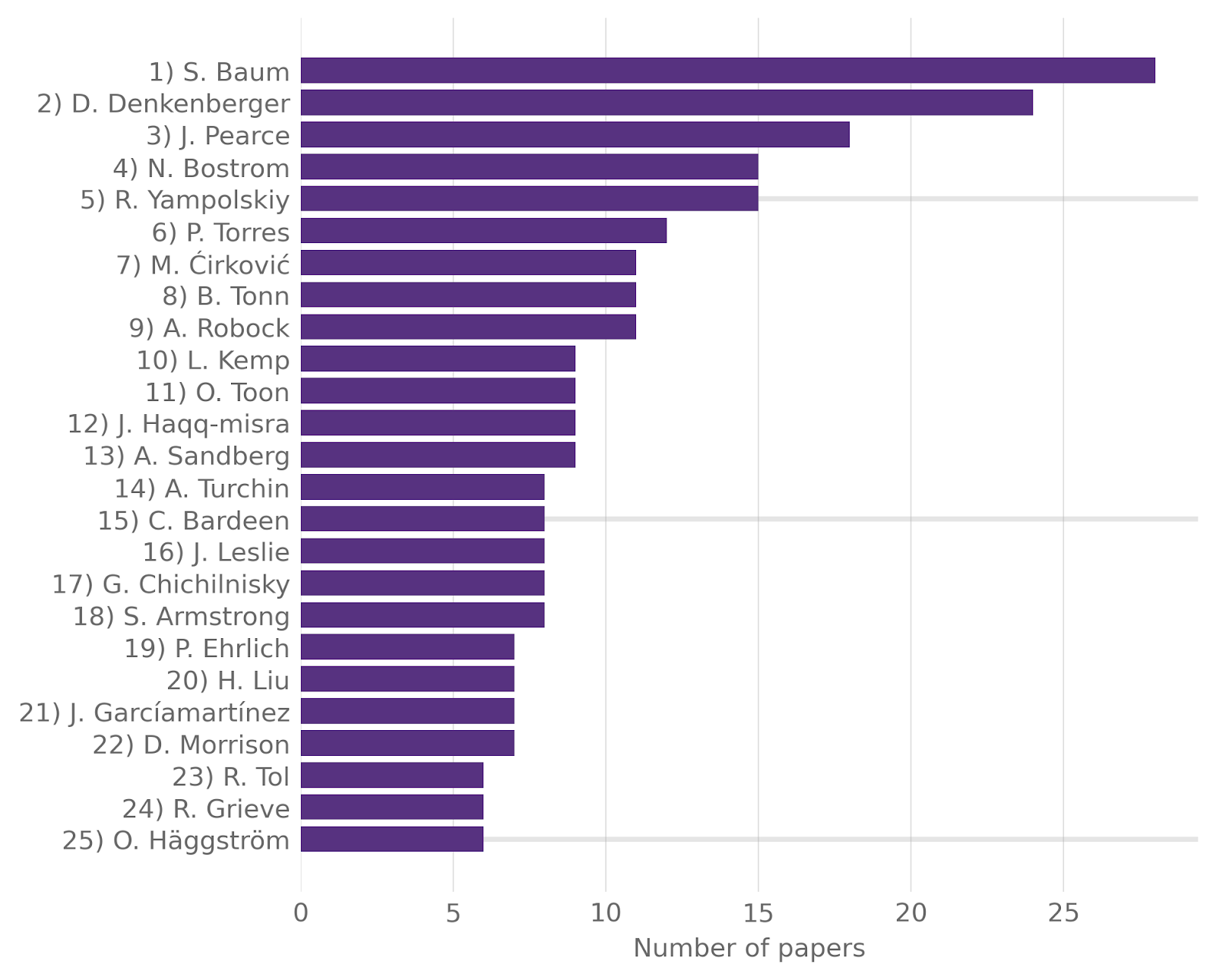

I created a list of researchers in the existential risk field, ranked by the number of papers they have published. Below I provide links to their work. This is not meant as a strict evaluation of the value of their contributions to the field, but as a quick overview of who is working on what. I hope it is helpful for people who are interested in existential risk studies, whether professionally or personally, and who want to understand the main topics currently being worked on. I think it could be especially helpful for people who are new to existential risk research and want to understand who the established organizations and researchers are and which topics they cover.

This is based on The Existential Risk Research Assessment (TERRA). TERRA is a website that uses machine learning to find publications related to existential risk. It is run by the Centre for the Study of Existential Risk (CSER) and was originally launched by Gorm Shackelford. I used their curated list of papers and wrote some code that counts the number of papers per author. You can find the code here and here are the results:

I am sorry for butchering some names, but this was due to the way I had to strip the strings to make them easily countable. As TERRA is based on the manual assessment of automatically collected papers, this list is likely incomplete, but I still think that it gives us a good overview of what is going on in existential risk studies. If you want to improve the data here, feel free to make an account at TERRA and start assessing papers.
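To make the counting step more concrete, here is a minimal sketch of how papers per author could be tallied. It is not the code from the repository: the input format (one list of author names per paper), the function names and the placeholder author names are all assumptions made for illustration. The normalization step mirrors the kind of string stripping mentioned above, which is also why some names end up looking butchered.

```python
from collections import Counter


def normalize_author(name: str) -> str:
    """Hypothetical normalization: drop periods, collapse whitespace, lowercase.

    This makes spelling variants of a name count as the same author,
    at the cost of mangling how the name looks.
    """
    return " ".join(name.replace(".", " ").split()).lower()


def count_papers_per_author(papers):
    """Count the number of papers on which each author appears.

    `papers` is assumed to be an iterable of author-name lists,
    one list per paper.
    """
    counts = Counter()
    for authors in papers:
        # A set ensures an author listed twice on one paper counts only once.
        counts.update({normalize_author(a) for a in authors})
    return counts


# Placeholder data, not taken from TERRA.
papers = [
    ["Author A", "Author B"],
    ["author a"],
    ["Author  B.", "Author C"],
]

counts = count_papers_per_author(papers)
top_authors = counts.most_common(2)  # the two most frequent authors
```

A cutoff like the top 25 would then simply be `counts.most_common(25)`.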

Below I have curated a list of the top 25 researchers, with links to their Google Scholar profile (if I could find it), the main existential risk organization they work for, and a publication that I think showcases the kind of existential risk research they do. If you think some of the people on the list would be better represented by another publication, please let me know and I will change it. The cutoff of 25 is arbitrary: it does not mean that the person at rank 26 is any worse than the person at rank 25, but I had to stop somewhere. You can find the complete list in the repository.

Here is the list:

- Seth Baum
  - Global Catastrophic Risk Institute (GCRI)
  - How long until human-level AI? Results from an expert assessment

- David Denkenberger
  - Alliance to Feed the Earth in Disasters (ALLFED)
  - Feeding everyone: Solving the food crisis in event of global catastrophes that kill crops or obscure the sun

- Joshua M. Pearce
  - Alliance to Feed the Earth in Disasters (ALLFED)
  - Leveraging Intellectual Property to Prevent Nuclear War

- Nick Bostrom
  - Future of Humanity Institute (FHI)
  - Superintelligence: Paths, Dangers, Strategies

- Roman V. Yampolskiy
  - University of Louisville
  - Predicting future AI failures from historic examples

- Émile P. Torres
  - Currently no affiliation, former Centre for the Study of Existential Risk (CSER)
  - Who would destroy the world? Omnicidal agents and related phenomena

- Milan M. Ćirković
  - Astronomical Observatory of Belgrade
  - The Temporal Aspect of the Drake Equation and SETI

- Bruce Edward Tonn
  - University of Tennessee
  - Obligations to future generations and acceptable risks of human extinction

- Alan Robock
  - Rutgers University
  - Volcanic eruptions and climate

- Owen Toon
  - University of Colorado, Boulder
  - Environmental perturbations caused by the impacts of asteroids and comets

- Jacob Haqq-Misra
  - Blue Marble Space Institute of Science
  - The Sustainability Solution to the Fermi Paradox

- Luke Kemp
  - Centre for the Study of Existential Risk (CSER)
  - Climate Endgame: Exploring catastrophic climate change scenarios

- Anders Sandberg
  - Future of Humanity Institute (FHI)
  - Converging Cognitive Enhancements

- Alexey Turchin
  - Alliance to Feed the Earth in Disasters (ALLFED)
  - Classification of global catastrophic risks connected with artificial intelligence

- Charles Bardeen
  - National Center for Atmospheric Research
  - Extreme Ozone Loss Following Nuclear War Results in Enhanced Surface Ultraviolet Radiation

- John Leslie
  - University of Guelph
  - Testing the Doomsday Argument

- Graciela Chichilnisky
  - Columbia University
  - The foundations of probability with black swans

- Stuart Armstrong
  - Future of Humanity Institute (FHI)
  - Eternity in six hours: Intergalactic spreading of intelligent life and sharpening the Fermi paradox

- Paul R. Ehrlich
  - Stanford University
  - Extinction: The Causes and Consequences of the Disappearance of Species

- Hin-Yan Liu
  - University of Copenhagen
  - Categorization and legality of autonomous and remote weapons systems

- Juan B. García Martínez
  - Alliance to Feed the Earth in Disasters (ALLFED)
  - Potential of microbial protein from hydrogen for preventing mass starvation in catastrophic scenarios

- David Morrison
  - Ames Research Center
  - Asteroid and comet impacts: the ultimate environmental catastrophe

- Richard Grieve
  - University of Western Ontario
  - Extraterrestrial impacts on earth: the evidence and the consequences

- Richard S.J. Tol
  - University of Sussex
  - Why Worry About Climate Change? A Research Agenda

- Olle Häggström
  - Chalmers University of Technology
  - Artificial General Intelligence and the Common Sense Argument

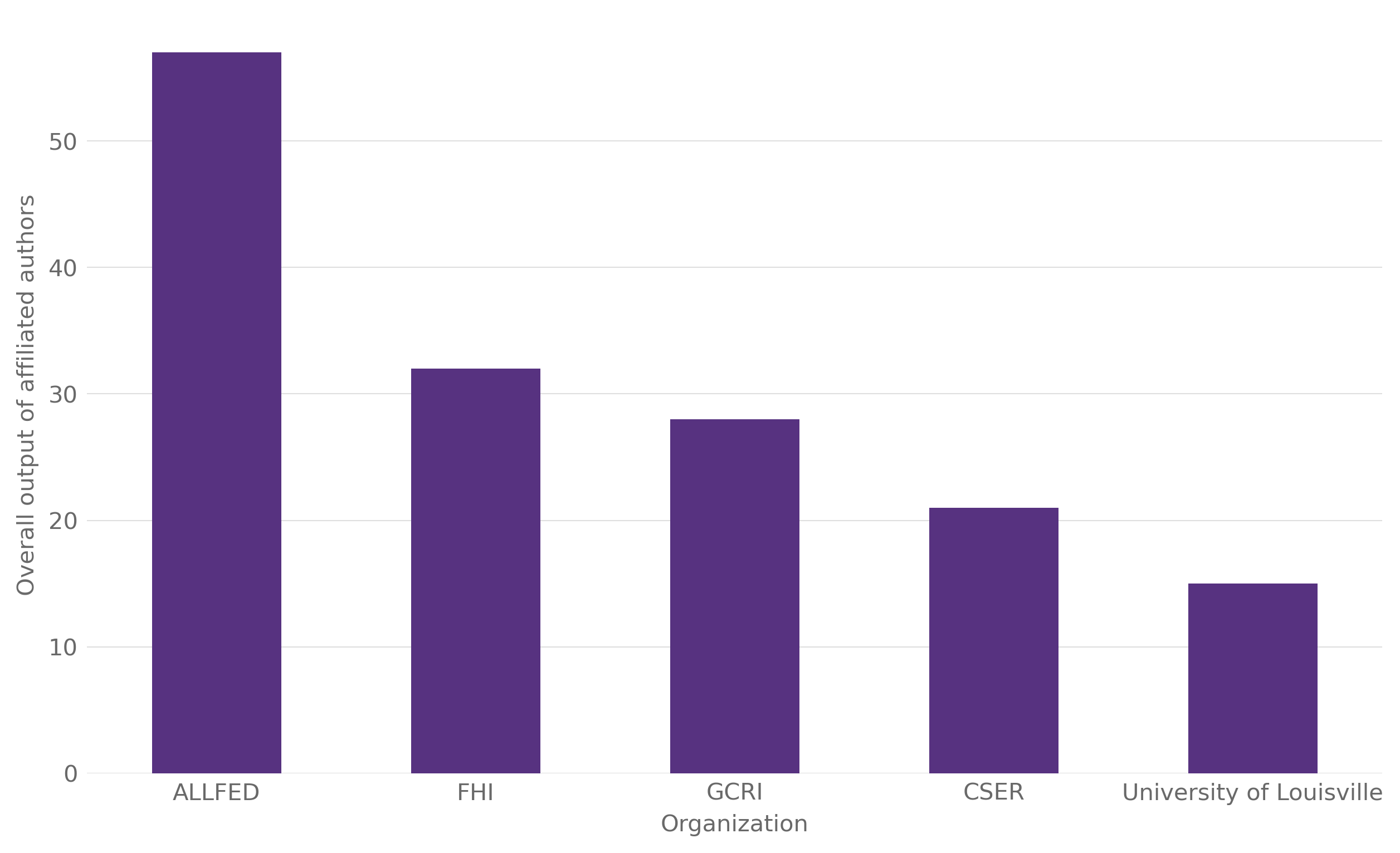

To get an overview of the overall output of existential risk organizations, I also added up the publications of all researchers who are at the same organization. This includes double counts, because a paper co-authored by two researchers from the same organization is counted once for each of them, but I still think it gives a rough approximation of the overall productivity of each organization.
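The aggregation described above could look roughly like the following sketch. The function name, the mapping from authors to organizations and the placeholder numbers are assumptions for illustration, not data from TERRA. Because it sums per-author counts, co-authored papers from the same organization are counted more than once, which is the double counting mentioned above.

```python
from collections import defaultdict


def papers_per_organization(author_counts, affiliations):
    """Sum per-author paper counts by organization.

    `author_counts` maps author name -> number of papers.
    `affiliations` maps author name -> organization (hypothetical input).
    Authors without a known affiliation are skipped.
    """
    totals = defaultdict(int)
    for author, n_papers in author_counts.items():
        org = affiliations.get(author)
        if org is not None:
            # Co-authored papers are added once per author (double counting).
            totals[org] += n_papers
    return dict(totals)


# Placeholder data, not the real results.
author_counts = {"Author A": 5, "Author B": 3, "Author C": 2, "Author D": 4}
affiliations = {"Author A": "Org X", "Author B": "Org X", "Author C": "Org Y"}

org_totals = papers_per_organization(author_counts, affiliations)
```

Removing the double counts would require the paper-level data, so that each paper is counted once per organization instead of once per author.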

Here are some personal reflections from categorizing research on TERRA. The surge in COVID-19-related papers appears to have started to dwindle, with fewer papers in 2022 than in 2021. Interest in AI, on the other hand, seems to be growing: in 2022 there was a noticeable uptick in AI-related papers compared to previous years. My impression is that AI-related papers might make up around 50% of the papers I categorized as having existential risk potential in 2022, although this is a rough estimate because I lack access to the categorized data.

Another concern is the lack of representation of women in the list (1 out of 25). It is unclear what precisely underlies this and how we can address it, but it is undoubtedly a problem, and we need to improve the situation. The community should take steps to become more welcoming and supportive so that women can participate, persist, and excel. For instance, mentoring programs specifically aimed at women working on existential risk could be a good option.

How to cite

Jehn, F. U. (2023, August 12). The 25 researchers who have published the largest number of academic articles on existential risk. Existential Crunch. https://doi.org/10.59350/j4gxm-1bm60